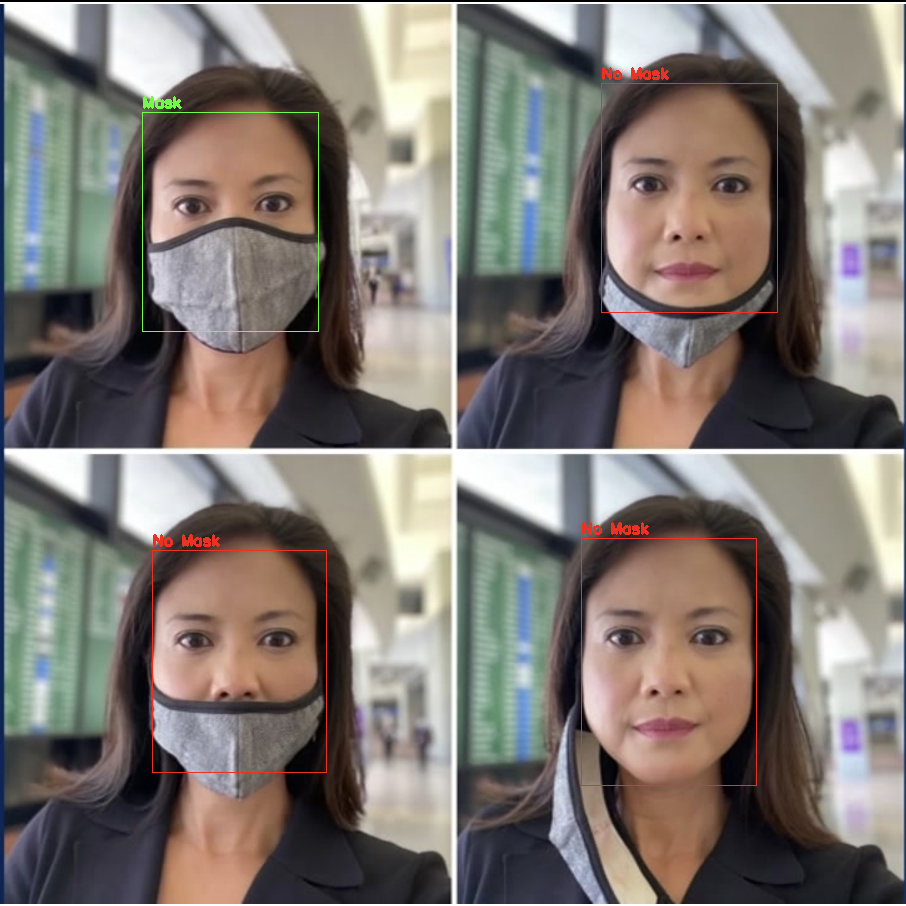

Detect Face Masks in Photos Using Computer Vision

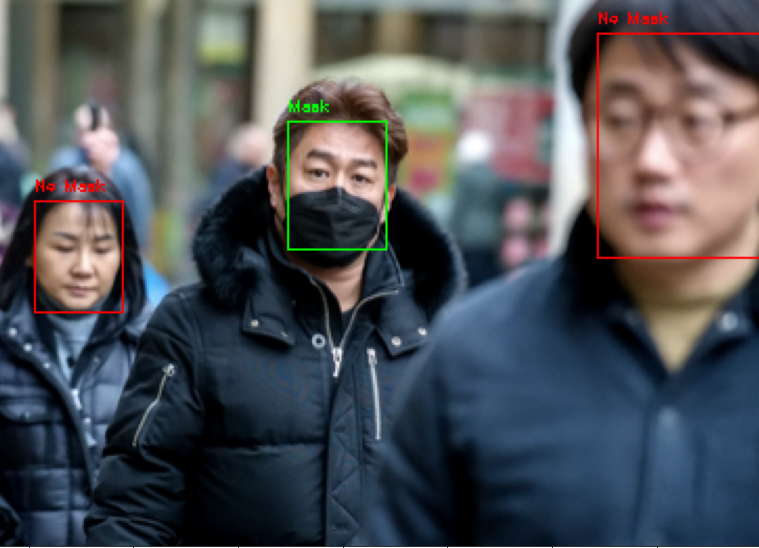

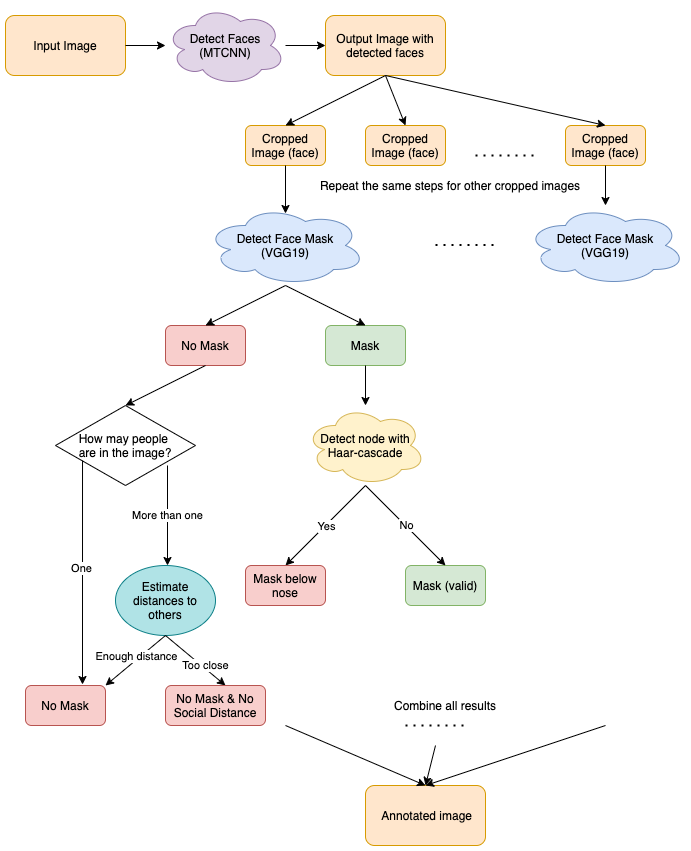

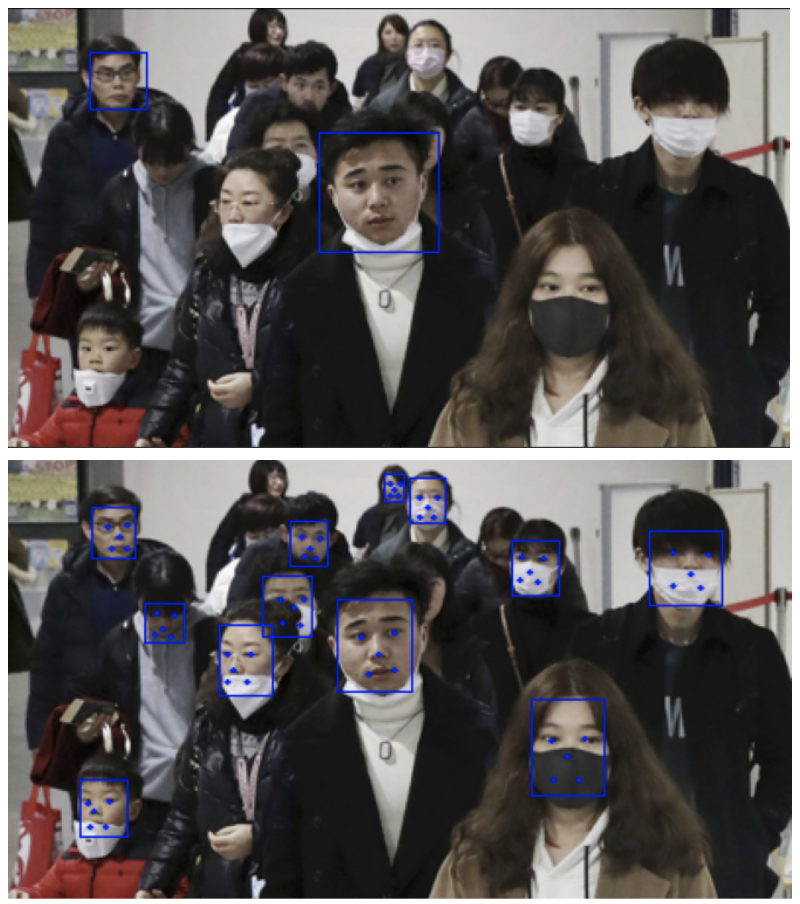

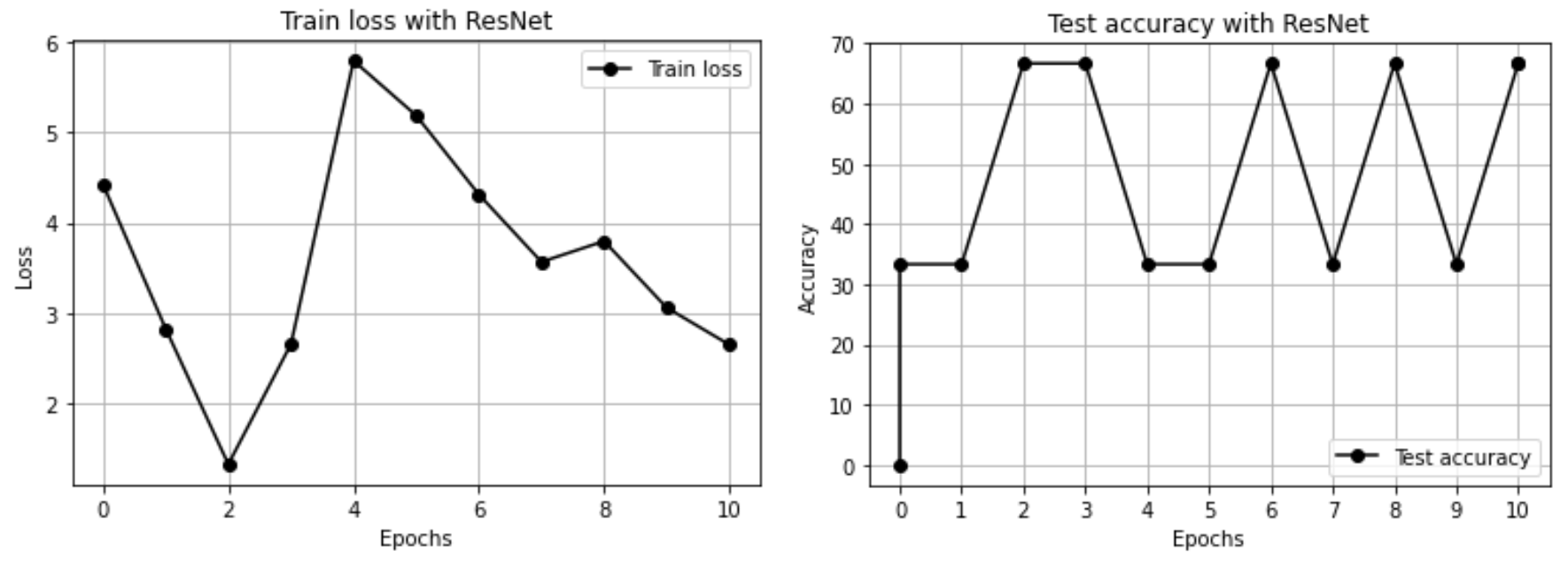

More than a year has passed since the WHO declared COVID-19 a pandemic on March 11, 2020. According to the WHO, there have been more than 170 million confirmed cases of COVID-19 globally, including 3.69 million deaths. Because the virus spreads through respiratory droplets, wearing a face mask in everyday life has been strongly emphasized. According to the scientific journal The Lancet, a properly worn mask reduces the risk of infection by about 85 percent. With that in mind, we were curious whether we could build a model that detects whether the people in an image are wearing face masks and, if they are, whether the masks are worn properly. We used the Face Mask 12k Kaggle Dataset to get photos of people with and without face masks.